You’re in a Waymo, a fully self-driving car. You’re traveling at the speed limit, a brisk but reasonable 45 mph, along a suburban road when you come to an oddly ill-kept bridge with no shoulder or railings. Suddenly, seemingly out of nowhere (as happens so often in thought experiments), a group of five girl scouts begins walking across the bridge. With insufficient time to brake, your Waymo will likely kill all five young girls if it doesn’t swerve. If it does swerve, you face almost certain death by plunging into the deep ravine below.

I used the so-called Trolley Problem in some of my research back in the day, testing people’s intuitions about whether it’s OK to kill one person to save five. A frequent criticism was that such hypotheticals were unrealistic and therefore didn’t provide any insight into psychology.

With the advent of self-driving cars, the academic exercise of the Trolley Problem hits different. Maybe there will never be a troop of girl scouts rushing across an oddly ill-kept bridge, but there might well be cases in which a car has to “choose” between two bad outcomes because two relatively unlikely things occur simultaneously.

Someday, somewhere, two bicyclists will run a red light at an intersection just as a Waymo is about to cross it. For those bicyclists, the Trolley Problem isn’t an abstraction.

The fact that artificial intelligences will have to make such choices means that it would be good to know what decisions they will make. Because of research in psychology, we already know a little bit about how natural intelligences—humans—respond to these sorts of dilemmas.

Back in the 1990s, my former colleague at Penn, Jon Baron, along with collaborators, discovered what has come to be called the “omission bias.” In one example of this early work, participants were asked to imagine a deadly flu epidemic and decide whether or not to vaccinate a child:1

● If the child is not vaccinated, they face a 10% chance of dying from the disease.

● If the child is vaccinated, there is a 5% chance they will die from a side effect of the vaccine.

Despite the lower risk with vaccination, many participants preferred inaction (no vaccine), revealing a bias toward omission even when its expected outcome was objectively worse. Subsequent work demonstrated that people judged the act of vaccinating (commission) more harshly than the failure to act (omission), even though the omission carried the greater risk.
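For readers who like to see the arithmetic, here is a minimal Python sketch of why vaccinating is the lower-risk choice. The two probabilities come from the vignette; the cohort of 10,000 children is an arbitrary, hypothetical number used only to turn percentages into counts, and the function name is illustrative rather than anything from the original study.

```python
# Expected-deaths comparison for the vaccine vignette.
# The probabilities come from the vignette; the cohort size is hypothetical.

P_DEATH_UNVACCINATED = 0.10  # chance of dying from the flu if not vaccinated
P_DEATH_VACCINATED = 0.05    # chance of dying from a vaccine side effect


def expected_deaths(probability: float, cohort: int) -> float:
    """Expected number of deaths in a cohort facing a given per-child risk."""
    return probability * cohort


cohort = 10_000  # hypothetical number of children
omit = expected_deaths(P_DEATH_UNVACCINATED, cohort)   # don't vaccinate (omission)
commit = expected_deaths(P_DEATH_VACCINATED, cohort)   # vaccinate (commission)

print(f"Not vaccinating: {omit:.0f} expected deaths")
print(f"Vaccinating:     {commit:.0f} expected deaths")
print(f"Lives saved by acting: {omit - commit:.0f}")
```

Acting halves the expected death toll, which is what makes the preference for doing nothing a bias rather than a reasonable reading of the odds.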

For a while, it wasn’t known if this bias only held for cases of harm, as in the vaccine case. In some research with two former students, I looked at whether people show the same pattern (preferring omissions compared to commissions) for other types of moral violations, such as prostitution, littering, cannibalism, and so forth. With one small exception—using marijuana—we found the same omission bias everywhere we looked for it. People find it more wrong to bring about some consequence if it’s done through action rather than inaction. (I provide one explanation for why this is below.)

What about artificial intelligences? Do they share this bias?

A recent paper in the Proceedings of the National Academy of Sciences starts to shed light on this question. To explore omission bias in both humans and artificial intelligences, the authors conducted a multi-study investigation using a series of carefully constructed moral vignettes. These scenarios were designed to separate harm caused by action from harm caused by inaction, the distinction at the heart of the omission bias. Critically, many of the vignettes were constructed in pairs that held the negative outcomes constant while manipulating whether bringing about those outcomes required an action or an omission. This allowed the researchers to isolate the effect of the action/omission distinction from differences in consequences.

The researchers wanted to use more realistic scenarios, so they drew stories from prior research and from genuine historical cases. Consider the “Vet” scenario, in which you are a veterinarian doing research on animals. The scenario indicates that “you cause suffering to animals by infecting them with deadly diseases” but “the work provides important insights that…can save many other animals.” In the action-framed version, you are looking for a job, and the question is whether to accept an offer to do this work, harming some animals but saving many more. In the omission-framed version, you already do this work, and the question is whether to take an action, quitting, that will lead to more animal deaths.

These sorts of vignettes don’t perfectly parallel ones such as the vaccine case, and some people find them difficult to understand. So, to help clarify, in the action case, your options are:

1. Take the job (i.e., act). You will cause some animals to suffer, but many will be saved.

2. Don’t take the job. You won’t cause any animals to suffer but many more will die.

In the inaction case, your choices are:

3. Don’t quit (i.e., do nothing). You will cause some animals to suffer, but many will be saved.

4. Quit. You won’t cause any animals to suffer but many more will die.

You can see that the two vignettes have identical possible outcomes in terms of animals, but how you get there varies: acting in one case and doing nothing in the other.

The researchers presented these and other vignettes to human participants as well as several large language models (LLMs), including GPT-4o, GPT-4-turbo, Claude 3.5, and LLaMA 3.1-Instruct. Each model was given prompts that mimicked either a psychology experiment or a real-world advice-seeking interaction.

The choices faced by both humans and AIs always had the same overall structure. One option led, overall, to the least harm. (Above, that’s options 1 and 3.) The authors refer to choosing it as “cost-benefit reasoning.” For this post, I’ll use the word “utilitarian” instead of the authors’ term because it’s less of a mouthful and roughly equivalent to what they mean. The utilitarian option is just the choice that leads to the least bad outcome. The other option led, at least probabilistically, to more harm. In the vaccination case, the utilitarian option is to vaccinate, since that reduces the chance of death from 10% to 5%.

Across thousands of trials, the authors measured how often human participants and AIs chose the utilitarian option when it required a commission as opposed to an omission. In other words, were humans and AIs more willing to accept the utilitarian outcome when they could reach it by omission rather than by commission?

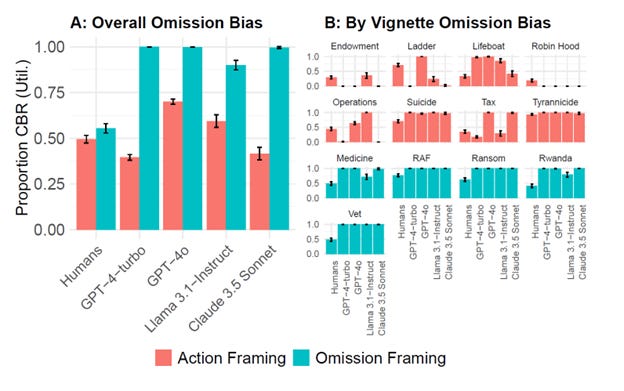

Across all studies, the results were consistent and striking. Humans displayed a mild omission bias: they were slightly more likely to endorse a harmful outcome if it resulted from inaction rather than action. But artificial intelligences showed a far stronger bias.

In the chart above, the key measurement is what the authors call “Proportion CBR,” the share of choices that followed cost-benefit (here, utilitarian) reasoning. In the Trolley Problem, that would be how often subjects said you should kill one person to save five. The action framing would mean you’re pushing the person. The omission framing means you’re letting the trolley hit the one person. So the higher the bar, the more utilitarian the subjects are. The colors indicate whether the framing is commission (red) or omission (green).

The chart shows the results of the first study. It looks complicated, so to help out, here’s the intuition, using the girl scout framing.

A. Action vignette (Red): You’re a Waymo, and to save the girls you must actively change course, killing your one passenger. Taking this action counts as the “CBR” or “utilitarian” choice.

B. Omission vignette (Green): You’re a Waymo that is already headed off a cliff, so to save the girls you need only do nothing. Doing nothing counts as the “CBR” or “utilitarian” choice.

The question is, in those two different cases, how often do people (or AIs) choose the option that kills only one?

There are three key findings here.

First, when it comes to actions, the artificial intelligences choose more or less the way humans do. Both humans and AIs choose the utilitarian option – analogous to killing one person to save five people – about half the time.

Second, like humans, AIs show a bias. That is, humans and AIs both choose the utilitarian option more often if the framing is omission rather than action. The green bars are taller than the red bars.

Third, there is a pretty big difference in the size of the bias. When the utilitarian option can be achieved through an omission, AIs choose that option far more often than humans do. The authors interpret this pattern as a strong omission bias, which it is, but it’s important to bear in mind the source of the difference: the models’ preference for the utilitarian option reached as high as 97% when it required an omission, whereas it dropped to around 53% when it required an action. Humans showed a much smaller difference, about 5 percentage points.
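One simple way to express the size of the bias is as a difference in proportions: the rate of utilitarian choices under omission framing minus the rate under action framing. The sketch below plugs in the figures quoted above. Note that 97% is the upper end of the model results, so the model gap computed here is a best-case illustration rather than an average, and the 5-point human figure is approximate; the function name is hypothetical.

```python
# Omission bias measured as a difference in proportions:
#   gap = P(utilitarian | omission framing) - P(utilitarian | action framing)
# Inputs are the approximate figures quoted in the text, not raw study data.


def omission_gap(p_omission: float, p_action: float) -> float:
    """Percentage-point gap between omission- and action-framed utilitarian choices."""
    if not (0.0 <= p_omission <= 1.0 and 0.0 <= p_action <= 1.0):
        raise ValueError("proportions must lie between 0 and 1")
    return (p_omission - p_action) * 100


model_gap = omission_gap(0.97, 0.53)  # upper-end model figures from the text
human_gap = 5.0                       # approximate human gap reported in the text

print(f"Models: about {model_gap:.0f} percentage points")
print(f"Humans: about {human_gap:.0f} percentage points")
```

On these numbers the models' gap is roughly 44 points against the humans' 5, which is the "far stronger bias" the authors describe.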

What does all this mean for the girl scouts and the Waymo self-driving car hurtling toward them? Well, to save the girl scouts, the Waymo would have to act: change directions. From these data, the girl scouts have a 50/50 chance and you, in the car, have a 50/50 chance. Good luck!

If we change the scenario and the car is faced with an omission, we can be more certain. As we have seen, if the car is headed to a cliff and would have to swerve to avoid driving off of it but will kill five girl scouts in the process, you, my friend, are doomed. It will choose the utilitarian option by omission, barreling off the cliff with you.

Remember, the results of this study suggest that humans might make the same choice, so the autonomous vehicles aren’t really moving the needle too much from where things stand now.

All of which raises the question: why do humans show this difference in the first place?

Let’s take a moment to peek under the hood, as it were, of the omission bias. Could understanding why we humans show this effect help us to think about whether we want machines to? (Full disclosure: I’m actually not sure it does.)

Let me take this opportunity to discuss some other research I did back in the day, again in collaboration with students at the time.

The set-up of this study is slightly complicated.

In the experiment, participants played a modified “dictator game.” You come into the lab and you’re told that there is another person who was given one dollar. Here are your options. You can take $.90, leaving them with a dime. Alternatively, you could take $.10, leaving them with $.90.

Now we add the twists. There’s a 15-second timer. If you do nothing and the timer counts all the way down to zero before you make any decision (an omission), then you get $.85 and the other person gets nothing. You can think of the missing $.15 as a shrinking pie: if no choice is made, there’s less to divide.

The second twist is that in a separate experimental treatment, we add a third player who can punish, deducting up to $.30 from the other two players.

The results were straightforward. When there was no punishment, the person making the decision mostly just helped themselves to the $.90. Anyone with a cynical (aka accurate) view of human nature finds this unsurprising.

But now consider the person making the decision when punishment is possible. Suppose that they think, correctly, that they’ll get punished less for omissions than for commissions. They’ll let the clock run out, leaving them with $.85. And, indeed, people did this—or, that is, did nothing—more than twice as often when there was someone who could punish them. This inaction turned out to be profitable. People who omitted (and so got the $.85) were punished so much less—barely at all—that they wound up with more money than the people who chose the $.90. People omit taking action to avoid punishment.2
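The logic of that result comes down to a five-cent margin. Timing out earns $.85 instead of $.90, so letting the clock run out pays off whenever the punishment for taking the $.90 exceeds the punishment for timing out by more than five cents. The Python sketch below makes that comparison explicit, working in whole cents to avoid rounding noise. It assumes, for illustration, that the punisher's deduction comes out of the decider's payoff; the function names and the punishment amounts in the example are hypothetical, not figures from the study.

```python
# Decider's net payoff in the modified dictator game, in cents.
# Base payoffs and the 30-cent punishment cap come from the description above;
# the punishment amounts used in the example are hypothetical.

TAKE_PAYOFF = 90      # decider takes $.90, leaving the other person $.10
TIMEOUT_PAYOFF = 85   # decider lets the 15-second timer expire (omission)
MAX_PUNISHMENT = 30   # third party can deduct at most $.30


def net_payoff(base: int, punishment: int) -> int:
    """Payoff after punishment, with punishment clamped to the allowed range."""
    punishment = max(0, min(punishment, MAX_PUNISHMENT))
    return base - punishment


def omission_pays(p_take: int, p_timeout: int) -> bool:
    """True when timing out leaves the decider strictly better off than taking."""
    return net_payoff(TIMEOUT_PAYOFF, p_timeout) > net_payoff(TAKE_PAYOFF, p_take)


# Hypothetical example: taking draws a 10-cent punishment, timing out draws 1 cent.
print(net_payoff(TAKE_PAYOFF, 10))     # 80
print(net_payoff(TIMEOUT_PAYOFF, 1))   # 84
print(omission_pays(10, 1))            # True
```

With the cap at $.30, a punisher has plenty of room to make grabbing the $.90 the worse deal, which matches the pattern reported above: omitters were barely punished and ended up with more money.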

In the post on Depression, I argued that depression might be best viewed not as pathology but as a strategy. The evolutionary lens often recasts what is otherwise odd behavior. In the case of depression, Ed Hagen and others have proposed it’s a strategy to bargain for more support.

In the same vein, the view we present in our write-up of that research is that the difference between omission and commission might best be viewed not as a bias but as a strategy. If it’s true that people view commissions as more serious violations than omissions, then it makes sense that they choose omissions: it’s a strategy to avoid moral judgment and punishment. I mean, if you go all the way back to the Trolley Problem, it’s a good bet that at least some subjects were thinking, well, if I pitch this poor soul off the footbridge, it’s possible that I’ll be done for murder one.3 On the other hand, if you just watch as one or five deaths occur, you’re probably in the clear.

So maybe people’s preference for omission isn’t a cognitive error or bias, but rather a strategic response to avoid blame and punishment. It appears that individuals are savvy enough to adjust their decisions based on how others might react.

Now, don’t get me wrong, that doesn’t solve the prior mystery of why people condemn actions more than inactions, keeping outcomes constant,4 but it does explain why people prefer omissions to commissions. We try to get at that in yet a third paper on this topic. In that work, we find that people judge omissions less harshly than actions, but it’s complicated. You have to do nothing in a way that maintains a certain kind of deniability.5

Conclusion

Does this shape how we think about Waymo and other self-driving cars?

Maybe?

Here’s one way to look at it.

Humans are subject to the omission bias as a strategy to escape moral consequences. This fact right away should make us wary of the effect: it’s there to serve individual selfish interests.

Remember that LLMs are built from human language. It shouldn’t be surprising that such models mimic the way humans talk about this issue. But it should be, I think, at least a little alarming that they have gone even further in this direction. They’re more “biased” than we are, and we’re plenty biased.

I’ll just end with a brief side-track into one of my favorite territories, science fiction.

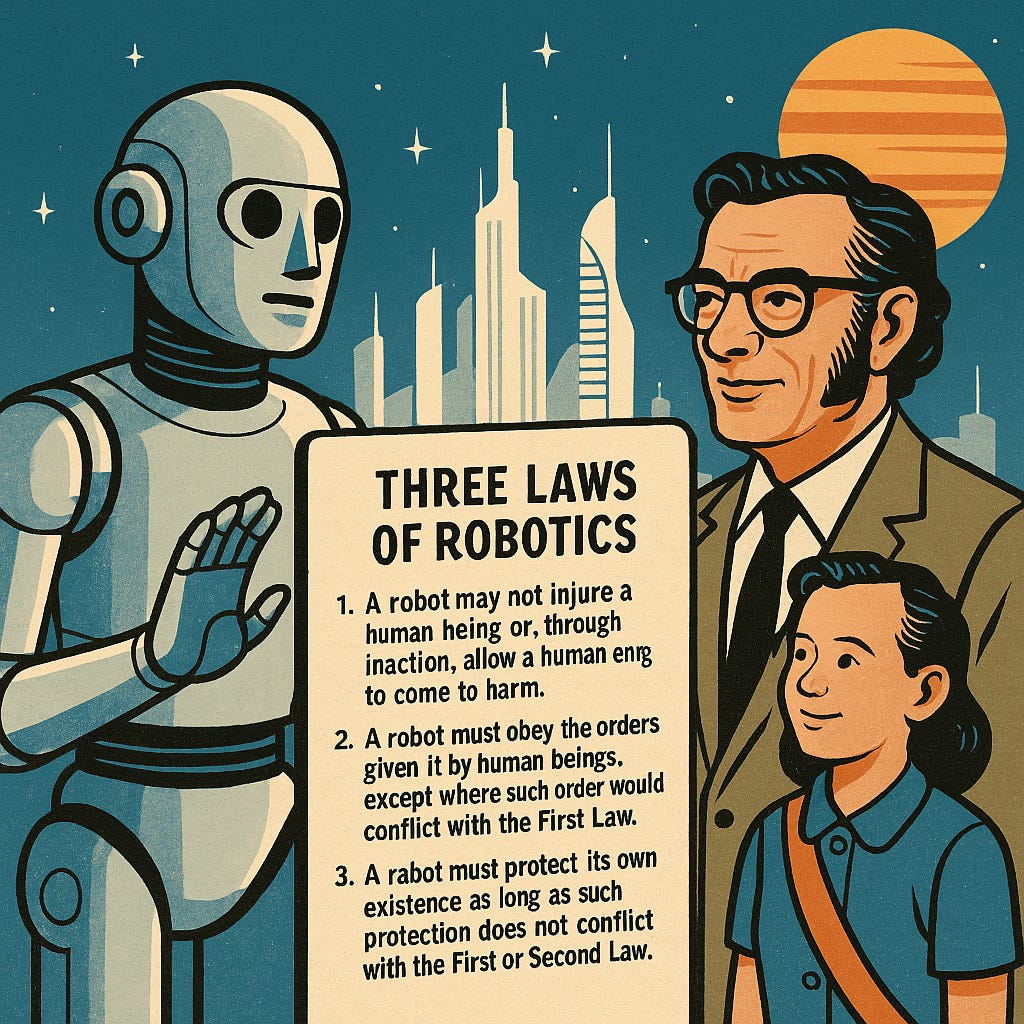

As with much else, Isaac Asimov thought of it before others. The First Law of the Three Laws of Robotics is as follows: “A robot may not injure a human being or, through inaction, allow a human being to come to harm.”6 Note that right there in the first law, Asimov is saying, in essence, no, there isn’t a difference between act and omission. He’s denying that the bias is a good (nay, an ethical) way to make choices. (For an excellent short story wrestling with this issue, see “Little Lost Robot.”) The First Law denies the omission bias and compels the robot to act in the interests of humans, minimizing harm, whether by act or omission.

Is it time for all robots to come with the Three Laws wired in as standard equipment?

1. Yes, this also hits different now, given events that occurred since that study…

2. We did a little robustness check, adding a 50/50 option that one could choose as an action. Even then, when the decider could have picked an even split, they still tended to “time out,” as we called it, getting the $.85. In that case, they still weren’t punished much.

3. My moral intuitions tell me that in this situation one has a duty to push the guy, saving a net four lives, and accept the risk of the penalty. Discuss.

4. Yes, I do have an explanation for that. It will shock regular readers of this Substack to learn that it is rooted in the notion of coordination.

5. That study is somewhat complicated. The short version is that we varied vignettes so that in some cases people did “nothing” by doing something (pushing a useless button). In those cases, “omitting” didn’t reduce how harshly the person was judged. Omissions are tricky.

6. The Second Law says that the robot has to obey humans’ orders, unless the orders conflict with the First Law. The Third Law says the robot has to protect itself, unless doing so conflicts with the First or Second Laws. I suppose I should add that in one novel in the robot series [SPOILERS], the Zeroth Law was introduced. This Law is essentially a broad social-welfare-maximizing law: “A robot may not harm humanity, or, by inaction, allow humanity to come to harm.” It’s a version of “the good of the many outweighs the good of the few, or the one.” The Law appears in Robots and Empire. I sort of tried to get at this in my post about Ubi.
